Skull Stripping

Skull stripping is the process of removing the skull from brain MRI images to isolate the brain for further analysis.

Papers and Code

MindGrab for BrainChop: Fast and Accurate Skull Stripping for Command Line and Browser

Jun 13, 2025

We developed MindGrab, a parameter- and memory-efficient deep fully-convolutional model for volumetric skull-stripping in head images of any modality. Its architecture, informed by a spectral interpretation of dilated convolutions, was trained exclusively on modality-agnostic synthetic data. MindGrab was evaluated on a retrospective dataset of 606 multimodal adult-brain scans (T1, T2, DWI, MRA, PDw MRI, EPI, CT, PET) sourced from the SynthStrip dataset. Performance was benchmarked against SynthStrip, ROBEX, and BET using Dice scores, with Wilcoxon signed-rank significance tests. MindGrab achieved a mean Dice score of 95.9 with standard deviation (SD) 1.6 across modalities, significantly outperforming classical methods (ROBEX: 89.1 SD 7.7, P < 0.05; BET: 85.2 SD 14.4, P < 0.05). Compared to SynthStrip (96.5 SD 1.1, P = 0.0352), MindGrab delivered equivalent or superior performance in nearly half of the tested scenarios, with minor differences (<3% Dice) in the others. MindGrab utilized 95% fewer parameters (146,237 vs. 2,566,561) than SynthStrip. This efficiency yielded at least 2x faster inference, 50% lower memory usage on GPUs, and enabled exceptional performance (e.g., 10-30x speedup, and up to 30x memory reduction) and accessibility on a wider range of hardware, including systems without high-end GPUs. MindGrab delivers state-of-the-art accuracy with dramatically lower resource demands, supported in brainchop-cli (https://pypi.org/project/brainchop/) and at brainchop.org.
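The Dice scores quoted in this abstract measure the overlap between a predicted brain mask and a reference mask. The snippet below is a minimal sketch of that metric on a percent scale, not the authors' evaluation code; the function name `dice_score`, the flat-list mask layout, and the toy masks are assumptions made for illustration.

```python
def dice_score(pred, truth):
    """Dice overlap (in percent) between two binary masks given as flat 0/1 sequences."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of voxels")
    # Voxels labelled as brain in both masks.
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    # Total brain voxels across the two masks.
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    if total == 0:
        # Both masks empty: treated as perfect agreement (a convention, assumed here).
        return 100.0
    return 100.0 * 2.0 * intersection / total


# Hypothetical toy masks: 3 shared brain voxels, 4 brain voxels in each mask.
pred = [0, 1, 1, 1, 0, 0, 1, 0]
truth = [0, 1, 1, 0, 0, 0, 1, 1]
score = dice_score(pred, truth)
print(score)
```

A real evaluation would apply the same formula to flattened 3D volumes, one score per scan, before computing the mean and SD.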

Skull stripping with purely synthetic data

May 12, 2025

While many skull stripping algorithms have been developed for multi-modal and multi-species cases, there is still a lack of a fundamentally generalizable approach. We present PUMBA (PUrely synthetic Multimodal/species invariant Brain extrAction), a strategy to train a model for brain extraction with no real brain images or labels. Our results show that even without any real images or anatomical priors, the model achieves comparable accuracy in multi-modal, multi-species and pathological cases. This work presents a new direction of research for any generalizable medical image segmentation task.

How We Won the ISLES'24 Challenge by Preprocessing

May 23, 2025

Stroke is among the top three causes of death worldwide, and accurate identification of stroke lesion boundaries is critical for diagnosis and treatment. Supervised deep learning methods have emerged as the leading solution for stroke lesion segmentation but require large, diverse, and annotated datasets. The ISLES'24 challenge addresses this need by providing longitudinal stroke imaging data, including CT scans taken on arrival to the hospital and follow-up MRI taken 2-9 days from initial arrival, with annotations derived from follow-up MRI. Importantly, models submitted to the ISLES'24 challenge are evaluated using only CT inputs, requiring prediction of lesion progression that may not be visible in CT scans for segmentation. Our winning solution shows that a carefully designed preprocessing pipeline including deep-learning-based skull stripping and custom intensity windowing is beneficial for accurate segmentation. Combined with a standard large residual nnU-Net architecture for segmentation, this approach achieves a mean test Dice of 28.5 with a standard deviation of 21.27.
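Intensity windowing, mentioned in this abstract, generally means clipping CT values (in Hounsfield units) to a range of interest and rescaling them. The sketch below shows the generic technique only; the window center and width used here (40 and 80 HU) are illustrative assumptions and are not the custom values from the paper, and `window_ct` is a hypothetical helper name.

```python
def window_ct(hu_values, center, width):
    """Clip Hounsfield values to [center - width/2, center + width/2] and rescale to [0, 1]."""
    if width <= 0:
        raise ValueError("width must be positive")
    low = center - width / 2.0
    high = center + width / 2.0
    scaled = []
    for hu in hu_values:
        clipped = min(max(hu, low), high)  # saturate values outside the window
        scaled.append((clipped - low) / (high - low))
    return scaled


# Assumed window: center 40 HU, width 80 HU, so the window spans 0 to 80 HU.
scaled = window_ct([-1000, 0, 40, 80, 1500], center=40, width=80)
print(scaled)
```

Values far outside the window, such as air and dense bone, saturate at 0 and 1, so the contrast budget goes to the soft-tissue range.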

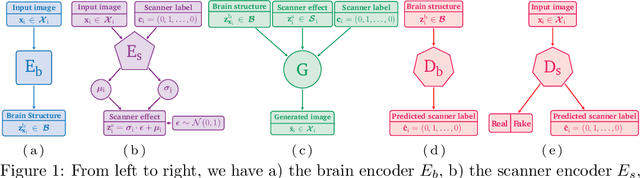

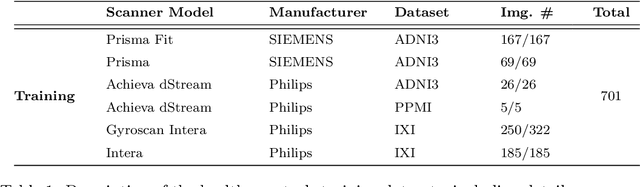

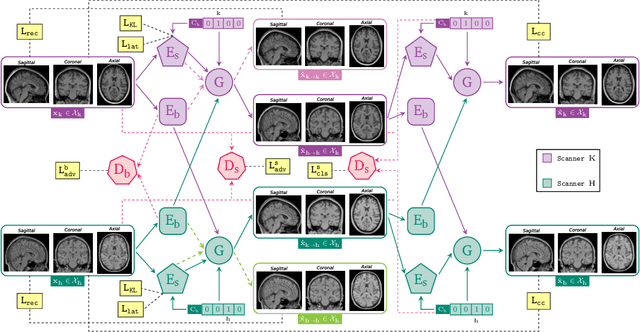

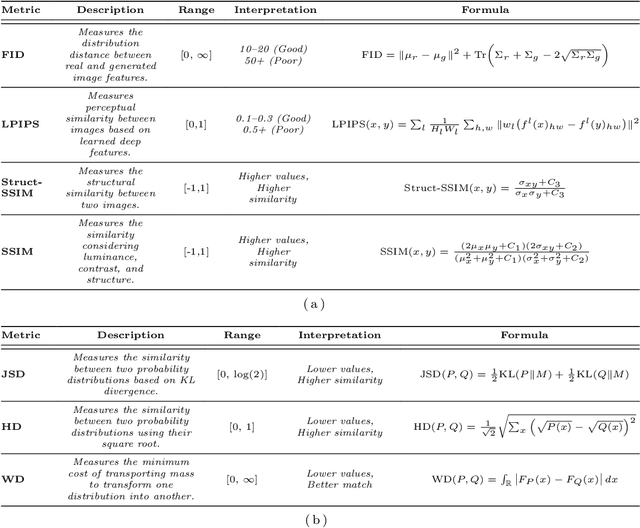

DISARM++: Beyond scanner-free harmonization

May 06, 2025

Harmonization of T1-weighted MR images across different scanners is crucial for ensuring consistency in neuroimaging studies. This study introduces a novel approach to direct image harmonization, moving beyond feature standardization to ensure that extracted features remain inherently reliable for downstream analysis. Our method enables image transfer in two ways: (1) mapping images to a scanner-free space for uniform appearance across all scanners, and (2) transforming images into the domain of a specific scanner used in model training, embedding its unique characteristics. Our approach presents strong generalization capability, even for unseen scanners not included in the training phase. We validated our method using MR images from diverse cohorts, including healthy controls, traveling subjects, and individuals with Alzheimer's disease (AD). The model's effectiveness is tested in multiple applications, such as brain age prediction ($R^2 = 0.60 \pm 0.05$), biomarker extraction, AD classification (test accuracy $= 0.86 \pm 0.03$), and diagnosis prediction (AUC = 0.95). In all cases, our harmonization technique outperforms state-of-the-art methods, showing improvements in both reliability and predictive accuracy. Moreover, our approach eliminates the need for extensive preprocessing steps, such as skull-stripping, which can introduce errors by misclassifying brain and non-brain structures. This makes our method particularly suitable for applications that require full-head analysis, including research on head trauma and cranial deformities. Additionally, our harmonization model does not require retraining for new datasets, allowing smooth integration into various neuroimaging workflows. By ensuring scanner-invariant image quality, our approach provides a robust and efficient solution for improving neuroimaging studies across diverse settings. The code is available at this link.

PhaseGen: A Diffusion-Based Approach for Complex-Valued MRI Data Generation

Apr 10, 2025

Magnetic resonance imaging (MRI) raw data, or k-Space data, is complex-valued, containing both magnitude and phase information. However, clinical and existing Artificial Intelligence (AI)-based methods focus only on magnitude images, discarding the phase data despite its potential for downstream tasks, such as tumor segmentation and classification. In this work, we introduce PhaseGen, a novel complex-valued diffusion model for generating synthetic MRI raw data conditioned on magnitude images, commonly used in clinical practice. This enables the creation of artificial complex-valued raw data, allowing pretraining for models that require k-Space information. We evaluate PhaseGen on two tasks: skull-stripping directly in k-Space and MRI reconstruction using the publicly available FastMRI dataset. Our results show that training with synthetic phase data significantly improves generalization for skull-stripping on real-world data, with an increased segmentation accuracy from 41.1% to 80.1%, and enhances MRI reconstruction when combined with limited real-world data. This work presents a step forward in utilizing generative AI to bridge the gap between magnitude-based datasets and the complex-valued nature of MRI raw data. This approach allows researchers to leverage the vast amount of available image domain data in combination with the information-rich k-Space data for more accurate and efficient diagnostic tasks. We make our code publicly available at https://github.com/TIO-IKIM/PhaseGen.
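To make the magnitude and phase distinction concrete, the following sketch splits a single complex sample into the two components and shows what is lost when only the magnitude is kept. It is a generic illustration using Python's standard `cmath` module and has no connection to the PhaseGen code; the sample value is arbitrary.

```python
import cmath

# One hypothetical complex-valued sample, standing in for a raw MRI data point.
sample = 3.0 + 4.0j

# Polar decomposition: magnitude is what a conventional image keeps,
# phase is what is usually discarded.
magnitude, phase = cmath.polar(sample)

# With both components the sample is recovered (up to rounding).
recovered = cmath.rect(magnitude, phase)

# With magnitude only, the phase is implicitly set to zero and the sample changes.
magnitude_only = cmath.rect(magnitude, 0.0)

print(magnitude, phase)
print(recovered, magnitude_only)
```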

Pfungst and Clever Hans: Identifying the unintended cues in a widely used Alzheimer's disease MRI dataset using explainable deep learning

Jan 27, 2025

Background. Deep neural networks have demonstrated high accuracy in classifying Alzheimer's disease (AD). This study aims to shed light on their black-box nature and reveal the individual contributions of T1-weighted (T1w) gray-white matter texture, volumetric information, and preprocessing to classification performance. Methods. We utilized T1w MRI data from the Alzheimer's Disease Neuroimaging Initiative to distinguish matched AD patients (990 MRIs) from healthy controls (990 MRIs). Preprocessing included skull stripping and binarization at varying thresholds to systematically eliminate texture information. A deep neural network was trained on these configurations, and the model performance was compared using McNemar tests with discrete Bonferroni-Holm correction. Layer-wise Relevance Propagation (LRP) and structural similarity metrics between heatmaps were applied to analyze learned features. Results. Classification performance metrics (accuracy, sensitivity, and specificity) were comparable across all configurations, indicating a negligible influence of T1w gray and white matter signal texture. Models trained on binarized images demonstrated similar feature performance and relevance distributions, with volumetric features such as atrophy and skull-stripping features emerging as primary contributors. Conclusions. We revealed a previously undiscovered Clever Hans effect in a widely used AD MRI dataset. Deep neural network classification predominantly relies on volumetric features, while eliminating gray-white matter T1w texture did not decrease performance. This study clearly demonstrates an overestimation of the importance of gray-white matter contrasts, at least for widely used structural T1w images, and highlights potential misinterpretation of performance metrics.
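The binarization step described in the Methods can be pictured with a small example: thresholding replaces every intensity with 0 or 1, so texture disappears while shape and volume remain. This is only a sketch of generic thresholding on a toy 2D image; the thresholds, the image, and the helper name `binarize` are assumptions and are not taken from the study.

```python
def binarize(image, threshold):
    """Return a 0/1 mask: 1 where the intensity exceeds the threshold."""
    return [[1 if value > threshold else 0 for value in row] for row in image]


# Hypothetical 4x4 "slice" with a textured 2x2 object in the middle.
image = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.4, 0.9, 0.0],
    [0.0, 0.8, 0.5, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]

mask_low = binarize(image, 0.1)   # keeps the whole object, texture removed
mask_high = binarize(image, 0.6)  # keeps only the brightest pixels

# Foreground pixel counts act as a crude "volume" at each threshold.
volume_low = sum(map(sum, mask_low))
volume_high = sum(map(sum, mask_high))
print(volume_low, volume_high)
```

At the low threshold the object's outline and size survive even though all intensity variation inside it is gone, which is the kind of texture-free input the study compares against.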

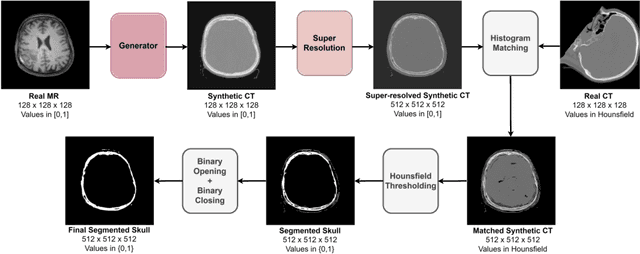

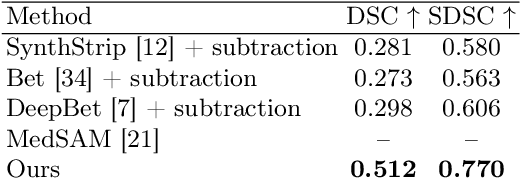

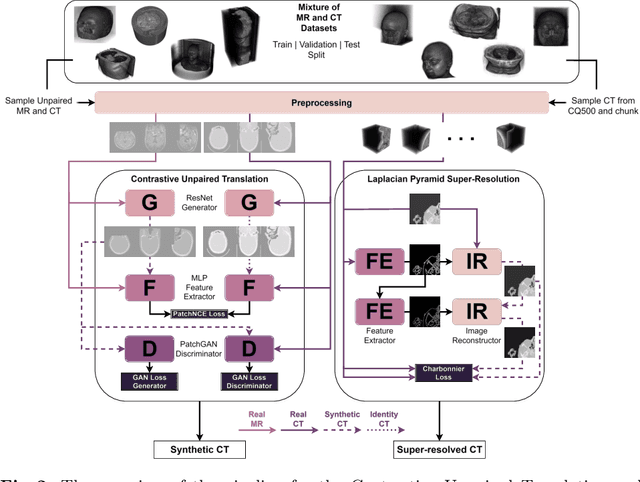

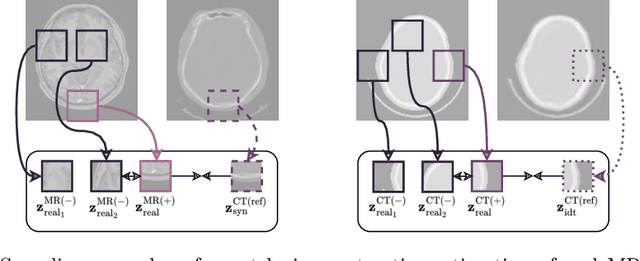

Unsupervised Skull Segmentation via Contrastive MR-to-CT Modality Translation

Oct 17, 2024

Skull segmentation from CT scans can be regarded as a solved problem. In MR, however, the task is significantly more complex because the images primarily depict soft tissue rather than bone. Capturing the bone structures from MR images of the head, where the main visualization objective is the brain, is very demanding. Attempts that rely on skull stripping do not seem well suited for this task and fail in many cases. On the other hand, supervised approaches require costly and time-consuming skull annotations. To overcome these difficulties, we propose a fully unsupervised approach in which we do not perform the segmentation directly on MR images, but instead generate synthetic CT data via MR-to-CT translation and perform the segmentation there. We address many issues associated with unsupervised skull segmentation, including the unpaired nature of MR and CT datasets (contrastive learning), low resolution and poor quality (super-resolution), and generalization capabilities. The research has significant value for downstream tasks requiring skull segmentation from MR volumes, such as craniectomy or surgery planning, and can be seen as an important step towards the utilization of synthetic data in medical imaging.

BrainMorph: A Foundational Keypoint Model for Robust and Flexible Brain MRI Registration

May 22, 2024

We present a keypoint-based foundation model for general purpose brain MRI registration, based on the recently-proposed KeyMorph framework. Our model, called BrainMorph, serves as a tool that supports multi-modal, pairwise, and scalable groupwise registration. BrainMorph is trained on a massive dataset of over 100,000 3D volumes, skull-stripped and non-skull-stripped, from nearly 16,000 unique healthy and diseased subjects. BrainMorph is robust to large misalignments, interpretable via interrogating automatically-extracted keypoints, and enables rapid and controllable generation of many plausible transformations with different alignment types and different degrees of nonlinearity at test-time. We demonstrate the superiority of BrainMorph in solving 3D rigid, affine, and nonlinear registration on a variety of multi-modal brain MRI scans of healthy and diseased subjects, in both the pairwise and groupwise setting. In particular, we show registration accuracy and speeds that surpass current state-of-the-art methods, especially in the context of large initial misalignments and large group settings. All code and models are available at https://github.com/alanqrwang/brainmorph.

Domain Influence in MRI Medical Image Segmentation: spatial versus k-space inputs

Jul 01, 2024

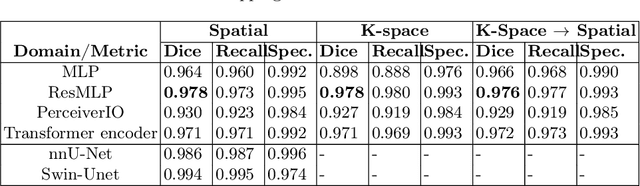

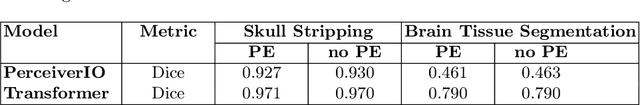

Transformer-based networks applied to image patches have achieved cutting-edge performance in many vision tasks. However, lacking the built-in bias of convolutional neural networks (CNN) for local image statistics, they require large datasets and modifications to capture relationships between patches, especially in segmentation tasks. Images in the frequency domain might be more suitable for the attention mechanism, because transforming an image into the frequency domain represents local features globally. MR images are particularly suitable because MRI data are acquired in the frequency domain (k-space). This work investigates how the image domain (spatial or k-space) affects segmentation results of deep learning (DL) models, focusing on attention-based networks and other non-convolutional models based on MLPs. We also examine the necessity of additional positional encoding for Transformer-based networks when input images are in the frequency domain. For evaluation, we pose a skull stripping task and a brain tissue segmentation task. The attention-based models used are PerceiverIO and a vanilla Transformer encoder. To compare with non-attention-based models, an MLP and ResMLP are also trained and tested. Results are compared with the Swin-Unet, the state-of-the-art medical image segmentation model. Experimental results show that using k-space for the input domain can significantly improve segmentation results. Also, additional positional encoding does not seem beneficial for attention-based networks if the input is in the frequency domain. Although none of the models matched the Swin-Unet's performance, the less complex models showed promising improvements with a different domain choice.
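The idea that a local feature becomes global in the frequency domain can be checked on a toy image: a single bright pixel has a discrete Fourier transform whose magnitude is the same at every frequency. The sketch below uses a naive 2D DFT written with the standard `cmath` module purely for illustration; the 4x4 image and the function name `dft2` are assumptions, and a real pipeline would use an FFT library instead.

```python
import cmath


def dft2(image):
    """Naive 2D discrete Fourier transform of a small real-valued image (list of rows)."""
    rows, cols = len(image), len(image[0])
    spectrum = [[0j] * cols for _ in range(rows)]
    for u in range(rows):
        for v in range(cols):
            acc = 0j
            for y in range(rows):
                for x in range(cols):
                    angle = -2.0 * cmath.pi * (u * y / rows + v * x / cols)
                    acc += image[y][x] * cmath.exp(1j * angle)
            spectrum[u][v] = acc
    return spectrum


# Hypothetical 4x4 image containing one local feature: a single bright pixel.
image = [[0.0] * 4 for _ in range(4)]
image[1][2] = 1.0

kspace = dft2(image)
magnitudes = [[abs(c) for c in row] for row in kspace]

# One nonzero pixel in the spatial domain...
spatial_nonzero = sum(1 for row in image for value in row if value != 0.0)
# ...spreads over every frequency-domain coefficient.
kspace_nonzero = sum(1 for row in magnitudes for m in row if m > 1e-9)
print(spatial_nonzero, kspace_nonzero)
```

Only the phase of each coefficient encodes where the pixel was, which is one way to see why the abstract questions the need for additional positional encoding on frequency-domain inputs.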

Boosting Skull-Stripping Performance for Pediatric Brain Images

Feb 26, 2024

Skull-stripping is the removal of background and non-brain anatomical features from brain images. While many skull-stripping tools exist, few target pediatric populations. With the emergence of multi-institutional pediatric data acquisition efforts to broaden the understanding of perinatal brain development, it is essential to develop robust and well-tested tools ready for the relevant data processing. However, the broad range of neuroanatomical variation in the developing brain, combined with additional challenges such as high motion levels, as well as shoulder and chest signal in the images, leaves many adult-specific tools ill-suited for pediatric skull-stripping. Building on an existing framework for robust and accurate skull-stripping, we propose developmental SynthStrip (d-SynthStrip), a skull-stripping model tailored to pediatric images. This framework exposes networks to highly variable images synthesized from label maps. Our model substantially outperforms pediatric baselines across scan types and age cohorts. In addition, the <1-minute runtime of our tool compares favorably to the fastest baselines. We distribute our model at https://w3id.org/synthstrip.
